About this project

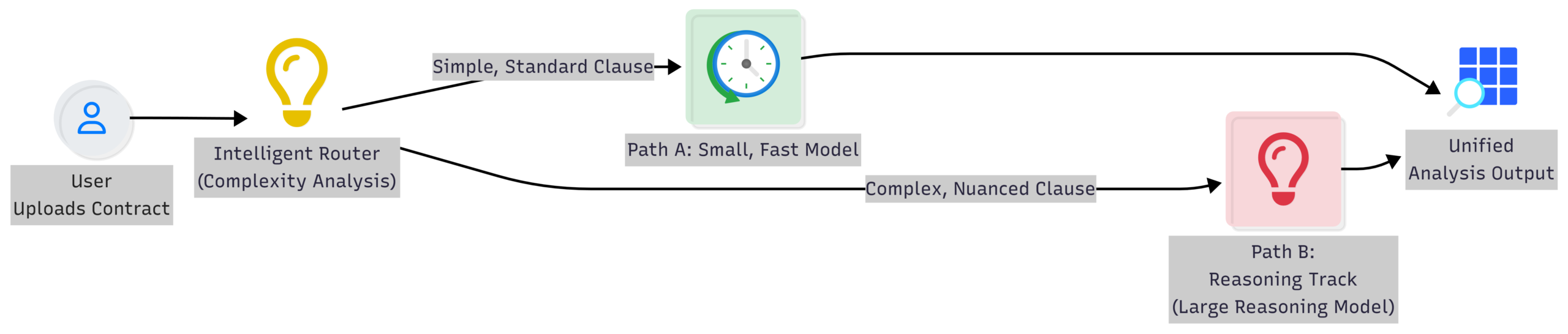

This project develops and evaluates a high-performance, AI-powered engine for automated analysis and red-flag detection in legal documents such as public tenders and contracts. The system takes PDF documents and dynamic JSON rulebooks as input and is used to benchmark several information extraction architectures, including Retrieval-Augmented Generation (RAG) and full-context, agent-based reasoning. The research measures accuracy across different foundation LLMs (e.g., Llama 3, Mistral), compares how these models interpret legal and contract-related data, and evaluates the impact of advanced prompt design on analytical quality. A key deliverable is identifying the best method for verifiability, that is, for linking AI-generated information to its source text. This hybrid, data-driven approach is intended to produce a trustworthy legal review tool and to establish a continuous learning loop in which human experts correct the system’s output and improve its accuracy over time.
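To make the idea of rulebook-driven red-flag detection with source linking more concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the project's implementation: the rulebook schema (`id`, `description`, `pattern`), the `Finding` class, and the `find_red_flags` function are all hypothetical, and a simple regular-expression match stands in for the LLM-based extraction step. The point it shows is that each finding carries the character offsets and the exact quote from the source text, so a reviewer can verify it.

```python
import json
import re
from dataclasses import dataclass

# Hypothetical rulebook; the project's actual JSON schema is not described.
RULEBOOK_JSON = """
[
  {"id": "R1", "description": "Unlimited liability", "pattern": "unlimited liability"},
  {"id": "R2", "description": "Automatic renewal", "pattern": "automatic(ally)? renew"}
]
"""


@dataclass
class Finding:
    rule_id: str
    description: str
    start: int   # character offset where the matched text begins
    end: int     # character offset just past the matched text
    quote: str   # exact source text, kept for verification


def find_red_flags(text: str, rulebook: list[dict]) -> list[Finding]:
    """Apply each rule to the text and record where it matched.

    A regex match is only a stand-in here for an LLM extraction step.
    """
    findings = []
    for rule in rulebook:
        for match in re.finditer(rule["pattern"], text, flags=re.IGNORECASE):
            findings.append(
                Finding(rule["id"], rule["description"],
                        match.start(), match.end(), match.group(0))
            )
    return findings


# Example text; in the project the text would come from a PDF document.
contract = (
    "The supplier accepts unlimited liability. "
    "The contract will automatically renew each year."
)
findings = find_red_flags(contract, json.loads(RULEBOOK_JSON))
for f in findings:
    print(f.rule_id, f.description, (f.start, f.end), repr(f.quote))
```

Because every finding stores its offsets, slicing the source text with `contract[f.start:f.end]` returns the quoted passage, which is one simple way to check an extracted claim against the document.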

Discover the team behind this project